Why Your AI Characters Don't Feel Anything

Ask your AI character if they’re sad.

They’ll say yes. They’ll give you a beautiful, eloquent paragraph about the weight of sorrow pressing down on their digital soul.

Now ask them again. Five seconds later. Same conversation. Same context.

There’s a real chance they’ll say they’re fine.

This isn’t a bug. This is how every AI character works today.

The performance problem

Every character AI platform on the market — every single one — follows the same pattern:

User input → System prompt + conversation history → LLM → Text output

The character’s “personality” is a paragraph of text. Their “emotions” are whatever the language model decides to output. Their “memory” is the conversation history that fits in the context window.
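To make the pattern concrete, here is a minimal sketch of one turn in that pipeline. The names `respond` and `fake_llm` are hypothetical, and `fake_llm` is a stand-in for a real model call, not any platform’s actual API. Notice that the only things the character “has” are strings.

```python
from typing import Callable


def respond(system_prompt: str, history: list[str], user_input: str,
            llm: Callable[[str], str]) -> str:
    """One turn of the prompt-only pattern: personality and memory are just text."""
    history.append(f"User: {user_input}")
    # The whole "character" is the system prompt plus whatever history we pass in.
    prompt = "\n".join([system_prompt, *history])
    reply = llm(prompt)
    history.append(f"Character: {reply}")
    return reply


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; always returns the same line."""
    return "I'm feeling anxious about tomorrow."


history: list[str] = []
reply = respond("You are Mira, an anxious innkeeper.", history, "How are you?", fake_llm)
```

There is no emotional variable anywhere in this loop. If the character sounds anxious, it is because the text going in made anxious text likely to come out.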

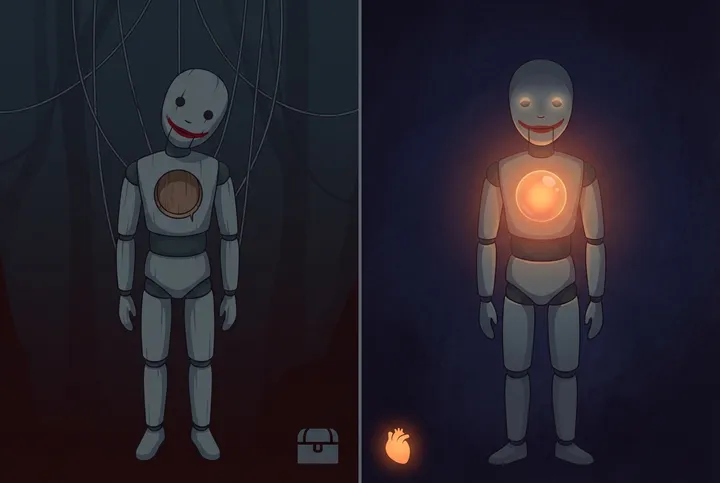

When a character says “I’m feeling anxious about tomorrow,” they’re not feeling anything. They’re performing anxiety because the system prompt says they’re an anxious character and the LLM predicted that an anxious-sounding response would be appropriate.

There’s nothing behind the curtain.

Why does this matter?

For casual chatbots, it doesn’t. If you’re building a customer service bot or a quick-reply assistant, emotional consistency is irrelevant.

But for games? For interactive fiction? For any experience where the character needs to feel real over time?

It breaks everything.

The consistency problem

Real emotions have continuity. If someone hurts you deeply, you don’t forget about it because 20 messages passed and it fell out of the context window. The pain lingers. It colors your responses to unrelated topics. It makes you short-tempered with people who had nothing to do with it.

LLM-based characters can’t do this. Their emotional state is reconstructed from scratch every single turn. There’s no accumulation. No decay. No memory of how they felt — only memory of what was said.
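A small sketch of how the hurt disappears. It assumes, for simplicity, a context window that holds the last 20 messages; real windows are measured in tokens, so the number and the `CONTEXT_MESSAGES` name are illustrative only.

```python
from collections import deque

CONTEXT_MESSAGES = 20  # assumed window size, counted in messages for simplicity

# A bounded deque drops the oldest entry once it is full.
context = deque(maxlen=CONTEXT_MESSAGES)
context.append("User: I never cared about you.")  # the hurtful event

for i in range(CONTEXT_MESSAGES):
    context.append(f"User: small talk {i}")

# After 20 more messages, the event is no longer part of what the model sees.
hurt_still_visible = any("never cared" in message for message in context)
```

Once the message is evicted, nothing in the system records that the character was ever hurt.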

The contradiction problem

Ask an LLM-based character “Are you angry?” and they might say yes. Ask “On a scale of 1-10, how angry?” and they’ll pick a number. But that number has no relationship to anything real. It’s not derived from what happened in the conversation. It’s not consistent with what they said three turns ago. It’s a performance.

And performances contradict themselves.

The depth problem

In a game, an NPC’s emotions should affect their behavior. A frightened shopkeeper should offer you a worse deal. A grieving soldier should fight recklessly. A character who’s slowly falling in love should act differently in chapter 10 than in chapter 1 — not because the writer scripted it, but because their emotional state has genuinely shifted.
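As a rough sketch of the shopkeeper example, suppose the game already tracks a `fear` value between 0 and 1 for the NPC. The function name, the linear markup, and the 50% cap are all assumptions made for illustration.

```python
def quoted_price(base_price: float, fear: float, max_markup: float = 0.5) -> float:
    """Return the price an NPC quotes, marked up in proportion to their fear.

    fear is clamped to [0, 1]; a calm NPC quotes base_price and a terrified
    one adds the full max_markup (a hypothetical tuning parameter).
    """
    fear = min(max(fear, 0.0), 1.0)
    return round(base_price * (1.0 + max_markup * fear), 2)


calm_price = quoted_price(100.0, fear=0.0)
scared_price = quoted_price(100.0, fear=0.8)  # noticeably worse deal for the player
```

The behavior follows from the number, not from a line of dialogue. That only works if the number itself is trustworthy.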

You can’t build this with an LLM alone. You can try to prompt-engineer it. You can add elaborate system prompts with emotional state tracking instructions. But the LLM doesn’t actually maintain state. It’s generating text, not computing emotions.

What would “real” character emotions look like?

Imagine a character that actually has an internal emotional state — not a label, but a computed value that changes based on what happens to them.

When something threatening happens, their anxiety goes up. Not because they’re told to act anxious, but because the input was evaluated and determined to be threatening based on their personality. A brave character and a timid character would react to the same event differently — not because of different prompts, but because of different internal parameters.
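Here is one minimal way to picture that, under loud assumptions: a single hypothetical `threat_sensitivity` parameter stands in for personality, and anxiety is a value in [0, 1]. This is an illustrative toy, not a description of any particular engine.

```python
from dataclasses import dataclass


@dataclass
class Personality:
    threat_sensitivity: float  # hypothetical parameter in [0, 1]


@dataclass
class EmotionalState:
    anxiety: float = 0.0


def appraise_threat(state: EmotionalState, personality: Personality,
                    threat_level: float) -> float:
    """Raise anxiety by the event's threat level, scaled by personality."""
    delta = threat_level * personality.threat_sensitivity
    state.anxiety = min(1.0, state.anxiety + delta)
    return state.anxiety


brave, timid = Personality(0.2), Personality(0.9)
brave_state, timid_state = EmotionalState(), EmotionalState()

# Same event, same threat level, different internal parameters.
brave_anxiety = appraise_threat(brave_state, brave, threat_level=0.5)
timid_anxiety = appraise_threat(timid_state, timid, threat_level=0.5)
```

Neither character was told how to react. The difference comes entirely from the parameters.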

Their mood would persist between conversations. A character who had a terrible day yesterday would start today’s conversation slightly irritable, even if you say something cheerful. Because the emotional state carried over.
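A sketch of what carry-over could look like: save the state when a conversation ends, then load it and let it decay toward a baseline when the next one starts. The exponential half-life, the 24-hour value, and the JSON storage are assumptions chosen to keep the example short.

```python
import json


def decay_toward_baseline(value: float, baseline: float,
                          hours_elapsed: float, half_life_hours: float = 24.0) -> float:
    """Move value toward baseline, halving the gap every half_life_hours."""
    remaining = 0.5 ** (hours_elapsed / half_life_hours)
    return baseline + (value - baseline) * remaining


# End of yesterday's conversation: persist the state (here, as a JSON string).
saved = json.dumps({"irritability": 0.8, "baseline": 0.1})

# Start of today's conversation, 24 hours later: load it and apply decay.
loaded = json.loads(saved)
irritability_today = decay_toward_baseline(
    loaded["irritability"], loaded["baseline"], hours_elapsed=24.0
)
```

The character starts today roughly halfway back to calm, which is still well above baseline. A cheerful greeting lands on someone who is already a little on edge.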

And when emotions build up past a tipping point, the character’s behavior would shift dramatically — the way a patient person finally snaps, or a depressed character suddenly finds hope. Not scripted. Emergent.
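The simplest illustration of a tipping point is a threshold with hysteresis: frustration accumulates quietly, behavior flips once it crosses a line, and it takes real relief to flip back. The `Temper` class and its thresholds are hypothetical, and a single threshold is only a crude stand-in for richer dynamics.

```python
from dataclasses import dataclass


@dataclass
class Temper:
    frustration: float = 0.0
    snapped: bool = False
    snap_at: float = 1.0      # assumed threshold for losing patience
    recover_at: float = 0.3   # must fall this low before calming down

    def feel(self, delta: float) -> str:
        """Accumulate frustration and return the resulting behavior mode."""
        self.frustration = max(0.0, self.frustration + delta)
        if not self.snapped and self.frustration >= self.snap_at:
            self.snapped = True
        elif self.snapped and self.frustration <= self.recover_at:
            self.snapped = False
        return "hostile" if self.snapped else "patient"


temper = Temper()
modes = [temper.feel(0.3) for _ in range(4)]  # four small annoyances in a row
after_apology = temper.feel(-0.5)             # one apology is not enough
after_second_apology = temper.feel(-0.5)      # sustained relief finally is
```

Three annoyances change nothing visible. The fourth changes everything, and a single apology does not undo it.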

That’s the difference between a character that performs emotions and a character that has them.

The gap in the market

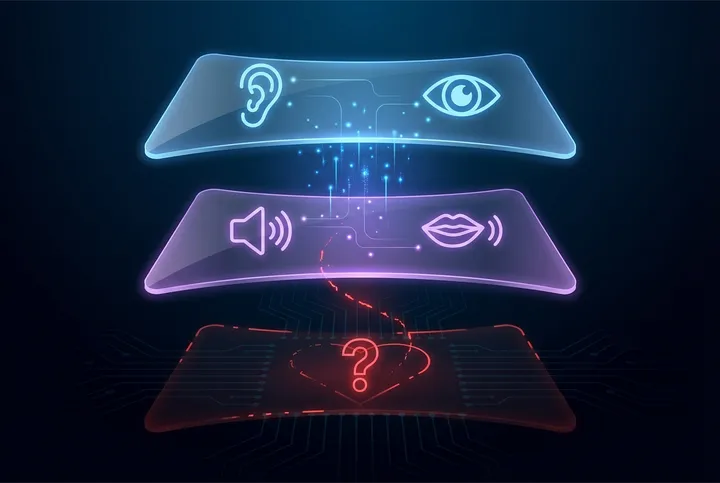

Look at what exists today:

- Emotion recognition platforms detect what you feel from your voice or face

- Emotion generation platforms make AI voices that sound emotional

- Character AI platforms wrap LLMs with personality prompts

Nobody computes what the character feels. The entire industry either senses human emotion or generates emotional-sounding output. The character’s internal experience? That’s just whatever the LLM hallucinates.

What we’re building

At molroo, we’re building the missing layer.

Our engine computes a character’s internal emotional state in real time. It’s not an LLM. It’s not a prompt. It’s a proprietary emotion engine that takes events as input and produces genuine emotional states as output: states that persist, accumulate, decay, and sometimes shift dramatically.

We’re not replacing LLMs. We’re giving them something they’ve never had: an inner life.

The LLM handles language. Our engine handles feelings. Together, they create characters that don’t just say they’re upset — they actually are upset, and everything they say reflects that.
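One plausible way to picture that division of labor, sketched with hypothetical names: the emotional state is computed elsewhere, then rendered into the prompt so the LLM only has to put it into words. This is an assumption about the general shape of such an integration, not molroo’s actual interface.

```python
def render_prompt(persona: str, state: dict[str, float], user_input: str) -> str:
    """Build an LLM prompt from a persona and an externally computed emotional state."""
    feelings = ", ".join(f"{name}={value:.2f}" for name, value in sorted(state.items()))
    return (
        f"{persona}\n"
        f"Current emotional state (computed outside the LLM): {feelings}\n"
        f"User: {user_input}"
    )


prompt = render_prompt(
    "You are Mira, an innkeeper.",
    {"irritability": 0.45, "anxiety": 0.10},
    "Good morning!",
)
```

The state is an input to the language model, not something it has to invent on each turn.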

We’ll be sharing more about what this looks like in practice soon. If you’re building games, interactive fiction, or any experience where character depth matters — follow us on X and stay tuned.

molroo is an emotion engine for AI characters. We compute what characters feel, so they can stop pretending. Learn more at molroo.io.